Hello,

I created a little video tutorial to model and render a folded piece of paper for a project I’m working on…

Here it is, enjoy!

Tag: 3d

Hello again!

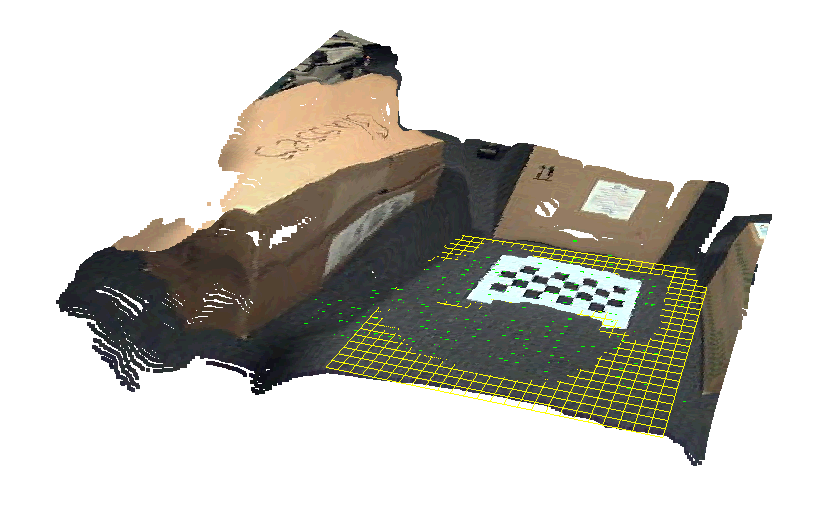

After a long hiatus I’m back with an update. Recently I’ve been upgrading the Structure-from-Motion Toy Library (https://github.com/royshil/SfM-Toy-Library/) to OpenCV 3.x from OpenCV 2.4.x.

Years ago I wanted to implement PTAM. I was young and naïve 🙂

Well I got a few moments to spare on a recent sleepless night, and I set out to implement the basic bootstrapping step of initializing a map with a planar object – no known markers needed, and then tracking it for augmented reality purposes.

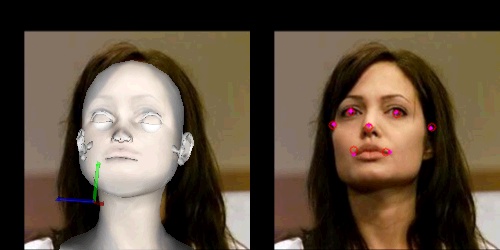

So I was contacted earlier by someone asking about the Head Pose Estimation work I put up a while back. And I remembered that I needed to go back to that work and fix some things, so it was a great opportunity.

So I was contacted earlier by someone asking about the Head Pose Estimation work I put up a while back. And I remembered that I needed to go back to that work and fix some things, so it was a great opportunity.

I ended up making it a bit nicer, and it’s also a good chance for us to review some OpenCV-OpenGL interoperation stuff. Things like getting a projection matrix in OpenCV and translating it to an OpenGL ModelView matrix, are very handy.

Let’s get down to the code.

Hello

Hello

This time I’ll discuss a basic implementation of a Structure from Motion method, following the steps Hartley and Zisserman show in “The Bible” book: “Multiple View Geometry”. I will show how simply their linear method can be implemented in OpenCV.

I treat this as a kind of tutorial, or a toy example, of how to perform Structure from Motion in OpenCV.

See related posts on using Qt instead of FLTK, triangulation and decomposing the essential matrix.

Update 2017: For a more in-depth tutorial see the new Mastering OpenCV book, chapter 3. Also see a recent post on upgrading to OpenCV3.

Let’s get down to business…

Hi

Hi

I sense that a lot of people are looking for a simple triangulation method with OpenCV, when they have two images and matching features.

While OpenCV contains the function cvTriangulatePoints in the triangulation.cpp file, it is not documented, and uses the arcane C API.

Luckily, Hartley and Zisserman describe in their excellent book “Multiple View Geometry” (in many cases considered to be “The Bible” of 3D reconstruction), a simple method for linear triangulation. This method is actually discussed earlier in Hartley’s article “Triangulation“.

I implemented it using the new OpenCV 2.3+ C++ API, which makes it super easy, and here it is before you.

Edit (4/25/2015): In a new post I am using OpenCV’s cv::triangulatePoints() function. The code is available online in a gist.

Edit (6/5/2014): See some of my more recent work on structure from motion in this post on SfM and that post on the recent Qt GUI and SfM library.

Update 2017: See the new Mastering OpenCV3 book with a deeper discussion, and a more recent post on the implications of using OpenCV3 for SfM.

Hi!

Hi!

I’ve been working on implementing a face image relighting algorithm using spherical harmonics, one of the most elegant methods I’ve seen lately.

I start up by aligning a face model with OpenGL to automatically get the canonical face normals, which brushed up my knowledge of GLSL. Then I continue to estimating real faces “spharmonics”, and relighting.

Let’s start!

Update: check out my new post about this https://www.morethantechnical.com/2012/10/17/head-pose-estimation-with-opencv-opengl-revisited-w-code/

Hi

Just wanted to share a small thing I did with OpenCV – Head Pose Estimation (sometimes known as Gaze Direction Estimation). Many people try to achieve this and there are a ton of papers covering it, including a recent overview of almost all known methods.

I implemented a very quick & dirty solution based on OpenCV’s internal methods that produced surprising results (I expected it to fail), so I decided to share. It is based on 3D-2D point correspondence and then fitting of the points to the 3D model. OpenCV provides a magical method – solvePnP – that does this, given some calibration parameters that I completely disregarded.

Here’s how it’s done

Hi

Hi

Been working hard at a project for school the past month, implementing one of the more interesting works I’ve seen in the AR arena: Parallel Tracking and Mapping (PTAM) [PDF]. This is a work by George Klein [homepage] and David Murray from Oxford university, presented in ISMAR 2007.

When I first saw it on youtube [link] I immediately saw the immense potential – mobile markerless augmented reality. I thought I should get to know this work a bit more closely, so I chose to implement it as a part of advanced computer vision course, given by Dr. Lior Wolf [link] at TAU.

The work is very extensive, and clearly is a result of deep research in the field, so I set to achieve a few selected features: Stereo initialization, Tracking, and small map upkeeping. I chose not to implement relocalization and full map handling.

This post is kind of a tutorial for 3D reconstruction with OpenCV 2.0. I will show practical use of the functions in cvtriangulation.cpp, which are not documented and in fact incomplete. Furthermore I’ll show how to easily combine OpenCV and OpenGL for 3D augmentations, a thing which is only briefly described in the docs or online.

Here are the step I took and things I learned in the process of implementing the work.

Update: A nice patch by yazor fixes the video mismatching – thanks! and also a nice application by Zentium called “iKat” is doing some kick-ass mobile markerless augmented reality.