If you’re a fan of OBS (Open Broadcaster Software), you may already be familiar with its vast library of plugins that enhance its functionality and provide added features. One such plugin that I recently developed is the URL API source plugin. This plugin allows you to fetch information from a URL and display it in your OBS stream. In this blog post, we will take a closer look at the source code for this plugin and understand how it works.

Category: qt

I spent an entire day getting OpenGL 4 to display data from a VAO with VBOs so I thought I’d share the results with you guys, save you some pain.

I’m using the excellent GL wrappers from Qt, and in particular QGLShaderProgram.

This is pretty straightforward, but the thing to remember is that OpenGL expects the vertices and other attributes (color? tex coords?) to come either from a bound GL buffer or from the host. So if your app is not working and nothing appears on screen, make sure GL has a bound buffer and that the shader attribute locations match up and are consistent (see the const int I have on the class here).

You already know I love libQGLViewer. So here is a snippet on how to do AR in a QGLViewer widget. It only requires a couple of tweaks/overloads to the plain vanilla widget setup (using the matrices properly, disabling the mouse binding) and it works.

The major problem I see with getting working AR from OpenCV’s intrinsic and extrinsic camera parameters is translating them to OpenGL. I saw a whole lot of solutions online, and I contributed my own experience a while back, so I want to go over it again here in the context of libQGLViewer, with a couple of extra tips.
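To make the translation concrete, here is a minimal sketch of one common way to turn pinhole intrinsics into an OpenGL projection matrix. It is not the code from the post: the `Intrinsics` struct and both function names are made up for illustration, and it assumes no skew, no lens distortion, a top-left image origin, and the usual flip of Y and Z when going from OpenCV camera space to OpenGL camera space.

```cpp
#include <array>
#include <cassert>

// Hypothetical container for OpenCV-style pinhole intrinsics, in pixels.
struct Intrinsics {
    double fx, fy;  // focal lengths
    double cx, cy;  // principal point, origin at top-left, y pointing down
};

// Build a column-major 4x4 OpenGL projection matrix from the intrinsics.
// Assumes no skew and a viewport of width x height pixels.
std::array<double, 16> projectionFromIntrinsics(const Intrinsics& k,
                                                double width, double height,
                                                double zNear, double zFar) {
    assert(zFar > zNear && zNear > 0.0);
    std::array<double, 16> m{};  // all zeros
    m[0]  = 2.0 * k.fx / width;
    m[5]  = 2.0 * k.fy / height;
    m[8]  = 1.0 - 2.0 * k.cx / width;    // principal point offset in x
    m[9]  = 2.0 * k.cy / height - 1.0;   // principal point offset in y (image y is down)
    m[10] = -(zFar + zNear) / (zFar - zNear);
    m[11] = -1.0;                        // clip w = -z in OpenGL camera space
    m[14] = -2.0 * zFar * zNear / (zFar - zNear);
    return m;
}

struct Pixel { double u, v; };

// Sanity helper: push an OpenCV camera-space point (X, Y, Z) through the
// OpenGL matrix and map the result back to pixel coordinates.
Pixel projectToPixel(const std::array<double, 16>& m,
                     double X, double Y, double Z,
                     double width, double height) {
    // OpenCV camera (y down, z forward) -> OpenGL camera (y up, looking down -z).
    const double xg = X, yg = -Y, zg = -Z;
    const double clipX = m[0] * xg + m[4] * yg + m[8]  * zg + m[12];
    const double clipY = m[1] * xg + m[5] * yg + m[9]  * zg + m[13];
    const double clipW = m[3] * xg + m[7] * yg + m[11] * zg + m[15];
    const double ndcX = clipX / clipW;
    const double ndcY = clipY / clipW;
    // NDC [-1, 1] -> pixels, with the top of the image at ndcY = +1.
    return { (ndcX + 1.0) * 0.5 * width, (1.0 - ndcY) * 0.5 * height };
}
```

A handy check is that `projectToPixel` should land on the same pixel as the plain pinhole formula `u = fx * X / Z + cx`, `v = fy * Y / Z + cy`. If the overlay comes out mirrored or shifted, the sign conventions on `m[8]` and `m[9]` are the first place to look.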

So I needed to speed up / slow down an audio stream I had (speech generated with Flite TTS) and naively I thought it would suffice to simply sample it at the right intervals and interpolate.

I quickly discovered that just re-sampling won’t do, because changing the playback rate also changes the pitch proportionally. And then I discovered the world of Time Scaling in audio and its many algorithms and approaches for changing the tempo without changing the pitch.
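For reference, the naive approach looks roughly like the sketch below. This is an illustration only, not the wrapper from the post; `resampleLinear` is a made-up name. It reads the input at fractional positions and linearly interpolates, so the clip gets shorter or longer, but every frequency in it scales by the same factor (a 440 Hz tone at speed 2 comes out at 880 Hz).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Naive speed change: step through the input `speed` samples at a time and
// linearly interpolate between neighbours. speed > 1 shortens the clip,
// speed < 1 lengthens it. Pitch changes along with the tempo.
std::vector<float> resampleLinear(const std::vector<float>& in, double speed) {
    assert(speed > 0.0);
    if (in.size() < 2) return in;

    std::vector<float> out;
    const double last = static_cast<double>(in.size() - 1);
    for (std::size_t n = 0;; ++n) {
        const double pos = static_cast<double>(n) * speed;  // fractional read position
        if (pos > last) break;
        const std::size_t i = static_cast<std::size_t>(pos);
        const double frac = pos - static_cast<double>(i);
        const float a = in[i];
        const float b = (i + 1 < in.size()) ? in[i + 1] : in[i];
        out.push_back(static_cast<float>(a + (b - a) * frac));
    }
    return out;
}
```

Calling `resampleLinear(samples, 2.0)` roughly halves the number of samples, which is exactly why speech sped up this way sounds like chipmunks and a proper time-scaling algorithm is needed.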

To my surprise there were a number of ready-made free libraries that do it. The first one I tried, RubberBand, did not work out: it had too many dependencies and I simply couldn’t be bothered compiling it for the Mac. SoundTouch, on the other hand, had a Homebrew formula, so it won by default.

I wrote a simple little wrapper around it that interfaces nicely with Qt.

Let’s see what’s going on there

For those of you still interested, I’ve made the move to using Qt and QGLViewer in the SfM-Toy-Lib project. Getting rid of PCL dependency (I think it’s a bloated library), and burying FLTK long in the past, I feel much better about it now.

Get it on github: https://github.com/royshil/SfM-Toy-Library

So QSerialPort only became part of Qt core on Qt5.1, but what should all those Qt4 projects do for an easy serial port access?

There is the excellent QExtSerialPort project, but it only has a QMake build system, and I like CMake!

Not to worry, a CMake build is easy to set up…

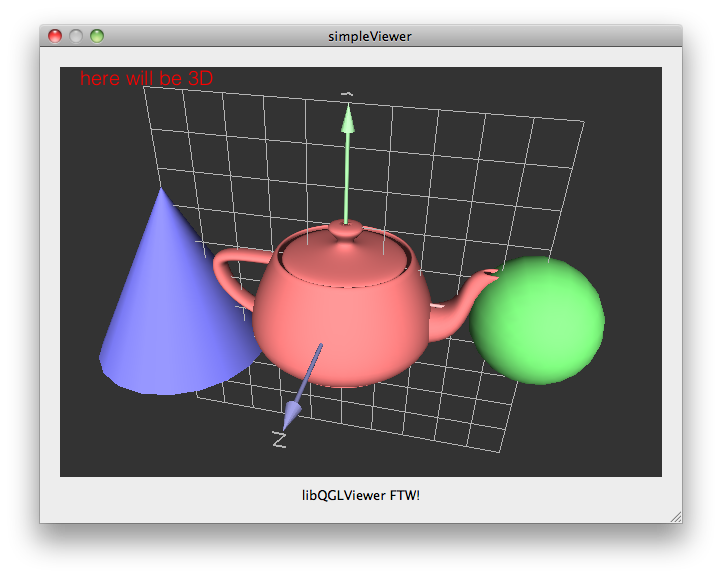

While looking for a very simple way to start up an OpenGL visualizer for quick 3D hacks, I discovered an excellent library called libQGLViewer, and I want to quickly show how easy it is to set up a 3D environment with it. This library provides an easy-to-access and feature-rich Qt widget you can embed in your UIs or use stand-alone (this may sound like a marketing thing, but they are not paying me anything 🙂).

This is based on the library’s own examples at: http://www.libqglviewer.com/examples/index.html, and some of the examples that come with the library source itself.

Let’s see how it’s done

As my search for the best platform to roll-out my new face detection concept continues, I decided to give ol’ Qt framework a go.

I like Qt. It’s cross-platform, has a clear and nice API, is straightforward, and reminds me somewhat of Apple’s Cocoa.

My intention is to get some serious face detection going on mobile devices. So that means either the iPhone, which so far did a crummy job performance-wise, or some other mobile device, preferably linux-based.

This led me to the decision to go with Qt. I believe you can get it to work on any Linux-ish platform (limo, moblin, android), and since Nokia bought Trolltech, it’s gonna work on Nokia phones soon, awesome!

Let’s get to the details, shall we?

I recently had to build a demo client that shows short video messages in an Ubuntu environment.

After checking out GTK+ I decided to go with the more natively OOP Qt toolbox (GTKmm didn’t look right to me), and I think I made the right choice.

So anyway, I have my video files encoded in some unknown format and I need my program to show them in some widget. I went around looking for an existing example, but I couldn’t find anything concrete, except for a good tip here that led me here for an example of using ffmpeg’s libavformat and libavcodec, but no end-to-end example including the Qt code.

The ffmpeg example was simple enough to just copy-paste into my project, but the whole painting over the widget’s canvas was not covered. Turns out painting video is not as simple as overriding paintEvent()…

Firstly, you need a separate thread for grabbing frames from the video file, because you don’t want the GUI event thread doing that.

That makes sense, but once the frame-grabbing thread (which I called VideoThread) has actually grabbed a frame and put it somewhere in memory, I need to tell the GUI thread to take those buffered pixels and paint them over the widget’s canvas.

This is the moment where I praise Qt’s excellent Signals/Slots mechanism. So I’ll have my VideoThread emit a signal notifying some external entity that a new frame is in the buffer.
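The shape of that hand-off can be sketched without Qt at all. The block below is a plain C++ stand-in, not the Qt code from this post: it swaps the signal/slot connection for a mutex-protected queue plus a condition variable, and `FrameChannel`, `Frame` and `grabAndPaint` are names invented for the illustration. One thread "grabs" fake frames and announces each one; the other waits for the announcement and "paints".

```cpp
#include <cassert>
#include <condition_variable>
#include <cstdint>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

using Frame = std::vector<std::uint8_t>;  // stand-in for decoded pixels

// Hypothetical hand-off point between the grabbing and the painting thread.
class FrameChannel {
public:
    // Grabber side: store a frame and wake the consumer ("emit").
    void push(Frame frame) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            frames_.push(std::move(frame));
        }
        ready_.notify_one();
    }

    // Grabber side: signal that no more frames will arrive.
    void close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        ready_.notify_all();
    }

    // Painter side: block until a frame is available; false once drained and closed.
    bool pop(Frame& out) {
        std::unique_lock<std::mutex> lock(mutex_);
        ready_.wait(lock, [this] { return !frames_.empty() || closed_; });
        if (frames_.empty()) return false;
        out = std::move(frames_.front());
        frames_.pop();
        return true;
    }

private:
    std::mutex mutex_;
    std::condition_variable ready_;
    std::queue<Frame> frames_;
    bool closed_ = false;
};

// Runs a grabber thread producing `frameCount` fake frames and "paints"
// each one on the calling thread. Returns how many frames were painted.
int grabAndPaint(int frameCount) {
    assert(frameCount >= 0);
    FrameChannel channel;
    std::thread grabber([&channel, frameCount] {
        for (int i = 0; i < frameCount; ++i) {
            channel.push(Frame(16, static_cast<std::uint8_t>(i)));  // fake 16-byte frame
        }
        channel.close();
    });

    int painted = 0;
    Frame frame;
    while (channel.pop(frame)) {
        ++painted;  // a real client would draw the pixels here
    }
    grabber.join();
    return painted;
}
```

In Qt the queue and the wake-up come for free: a signal emitted from the worker thread and connected to a slot on a GUI-thread object is delivered through the event loop, so the slot runs on the GUI thread where painting is allowed.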

Here’s a little code: