Hello

Sorry for the bombardment of posts, but I want to share some stuff I’ve been working on lately, so when I find time I just shoot the posts out.

So this time I’ll talk briefly about how to estimate a rigid transformation between two point clouds that may also differ in scale. You will end up with a rigid transformation (rotation and translation) and a scale factor, so in fact it will be a similarity transformation. We will first find the right scale, and then find the right transformation if there is one (and otherwise the best-fitting transformation there is).
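To make the two-step idea concrete, here is a minimal sketch in Python with NumPy. It is not the code from the full post: it assumes the point correspondences between the two clouds are already known, takes the scale as the ratio of RMS distances from the centroids, and recovers the rotation with the standard SVD (Kabsch) approach. The function name `estimate_similarity` is made up for this illustration.

```python
import numpy as np

def estimate_similarity(src, dst):
    """Estimate scale s, rotation R, translation t with dst ~= s * R @ src + t.

    src and dst are (N, 3) arrays of corresponding points (an assumption of
    this sketch; unmatched clouds would need a matching step first).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)

    # Center both clouds on their centroids.
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst

    # Step 1: scale as the ratio of RMS distances from the centroids.
    scale = np.sqrt((dst_c ** 2).sum() / (src_c ** 2).sum())

    # Step 2: rotation from the SVD of the cross-covariance matrix.
    H = src_c.T @ dst_c
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T

    # Translation maps the scaled, rotated source centroid onto the target's.
    t = mu_dst - scale * R @ mu_src
    return scale, R, t
```

With noisy data the RMS ratio is only one of several reasonable scale estimates, so treat it as a starting point rather than the definitive choice.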

Category: graphics

Hello

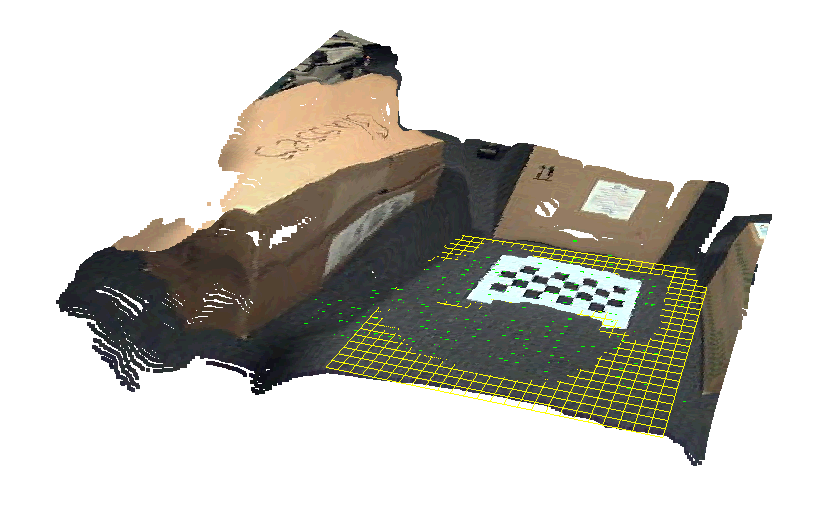

This time I’ll discuss a basic implementation of a Structure from Motion method, following the steps Hartley and Zisserman show in “The Bible” book: “Multiple View Geometry”. I will show how simply their linear method can be implemented in OpenCV.

I treat this as a kind of tutorial, or a toy example, of how to perform Structure from Motion in OpenCV.

See related posts on using Qt instead of FLTK, triangulation and decomposing the essential matrix.

Update 2017: For a more in-depth tutorial see the new Mastering OpenCV book, chapter 3. Also see a recent post on upgrading to OpenCV3.

Let’s get down to business…

Hi

I sense that a lot of people are looking for a simple triangulation method with OpenCV, when they have two images and matching features.

While OpenCV contains the function cvTriangulatePoints in the triangulation.cpp file, it is not documented, and uses the arcane C API.

Luckily, in their excellent book “Multiple View Geometry” (in many cases considered to be “The Bible” of 3D reconstruction), Hartley and Zisserman describe a simple method for linear triangulation. This method is actually discussed earlier in Hartley’s article “Triangulation”.

I implemented it using the new OpenCV 2.3+ C++ API, which makes it super easy, and here it is before you.
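For readers who want the gist before the full code, here is a rough sketch of linear triangulation in Python with NumPy. It is not the C++ implementation from the post: it uses the homogeneous (DLT) variant solved with an SVD, and the function name `triangulate_linear` is made up for this illustration. It assumes `P1` and `P2` are 3x4 camera projection matrices and `x1`, `x2` are the matching image points in the same coordinates those matrices project to.

```python
import numpy as np

def triangulate_linear(P1, P2, x1, x2):
    """Triangulate one 3D point from two views (homogeneous linear method).

    P1, P2: 3x4 projection matrices. x1, x2: matching (u, v) image points.
    Returns the 3D point as a length-3 array.
    """
    P1 = np.asarray(P1, dtype=float)
    P2 = np.asarray(P2, dtype=float)

    # Each view gives two linear equations in the homogeneous point X:
    #   u * (P[2] . X) - (P[0] . X) = 0
    #   v * (P[2] . X) - (P[1] . X) = 0
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])

    # The least-squares solution of A X = 0 is the right singular vector
    # belonging to the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]  # back from homogeneous coordinates
```

A point at infinity would make the last coordinate zero, so a real pipeline should guard that division.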

Edit (4/25/2015): In a new post I am using OpenCV’s cv::triangulatePoints() function. The code is available online in a gist.

Edit (6/5/2014): See some of my more recent work on structure from motion in this post on SfM and that post on the recent Qt GUI and SfM library.

Update 2017: See the new Mastering OpenCV3 book with a deeper discussion, and a more recent post on the implications of using OpenCV3 for SfM.

Hi!

I’ve been working on implementing a face image relighting algorithm using spherical harmonics, one of the most elegant methods I’ve seen lately.

I start by aligning a face model with OpenGL to automatically get the canonical face normals, which brushed up my knowledge of GLSL. Then I continue to estimating the “spharmonics” of real faces, and relighting.

Let’s start!

Hi!

I would like to present something I have been working on recently, a work that has immensely affected what I wrote in the blog in the past two years…

To use it:

Go to this page,

watch the short instruction video,

download the application (MacOSX-Intel-x64, Win32),

and make yourself a model!

It takes just a couple of minutes and it’s very simple…

This work is an academic research project. Please, please take the time to fill out the survey! It is very short.

The results of the survey (the survey alone, no photos of your work) will possibly be published in an academic paper.

Note: No information is sent anywhere in any way outside of your machine (you may even unplug the network). All results are saved locally on your computer, and no inputs are recorded or transmitted. The application contains no malware. The source is available here.

Note II: All stock photos of models used in the application are released under Creative Commons By-NC-SA 2.0 license. Creator: http://www.flickr.com/photos/kk/. If you wish to distribute your results, they should also be released under a CC-By-NC-SA 2.0 license.

Thank you!

Roy.

So, I’ve been working hard on my projects, and discovered some interesting possibilities for frame animation in Android. Last time I was using an HTML approach, because of memory consumption issues with using ImageViews. However, now my approach is using View.onDraw(Canvas) to draw BMPs straight off files, in an asynchronous way, and it seems to work pretty well.

Let me tell you how I did it.

I found a nice little trick to ease the work with the very noisy depth image the Kinect gives out. The image is filled with “blank” values that basically mark where the data is unreadable. The secret is to use inpainting to cover these areas and get a cleaner image. And as always, no need to dig deep – OpenCV has it all included.