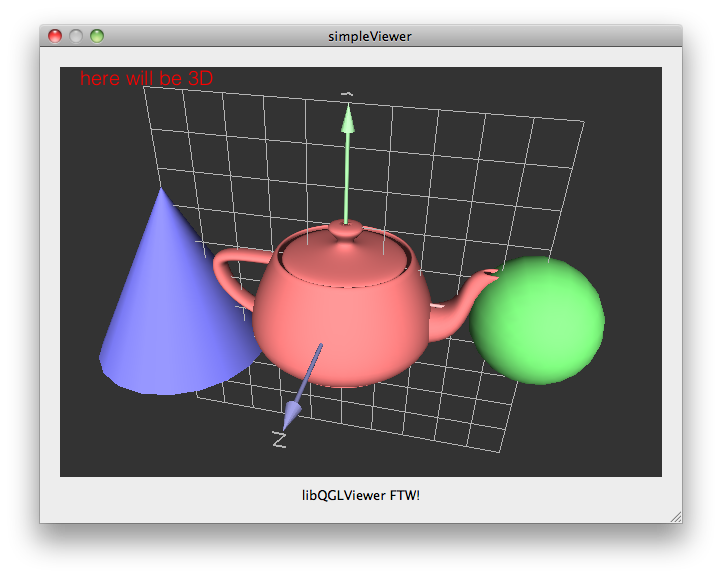

While looking for a very simple way to start up an OpenGL visualizer for quick 3D hacks, I discovered an excellent library called libQGLViewer, and I want to quickly show how easy it is to set up a 3D environment with it. This library provides an easy-to-use, feature-rich Qt widget you can embed in your UIs or use stand-alone (this may sound like a marketing thing, but they are not paying me anything 🙂).

This is based on the library’s own examples at http://www.libqglviewer.com/examples/index.html, and on some of the examples that ship with the library source itself.

Let’s see how it’s done.

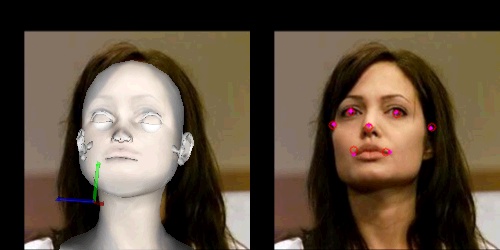

Someone contacted me recently asking about the Head Pose Estimation work I put up a while back. That reminded me that I needed to go back to that work and fix some things, so it was a great opportunity.
