I want to share a small piece of code that does Laplacian Blending using OpenCV. It’s one of the most basic and canonical methods of image blending, and a must-do exercise for any computer graphics student.

Author: Roy

So, I’ve been working hard on my projects, and discovered some interesting possibilities for frame animation on Android. Last time I used an HTML approach, because of memory consumption issues with ImageViews. Now, however, my approach is to use View.onDraw(Canvas) to draw BMPs straight off files, asynchronously, and it seems to work pretty well.

Let me tell you how I did it.

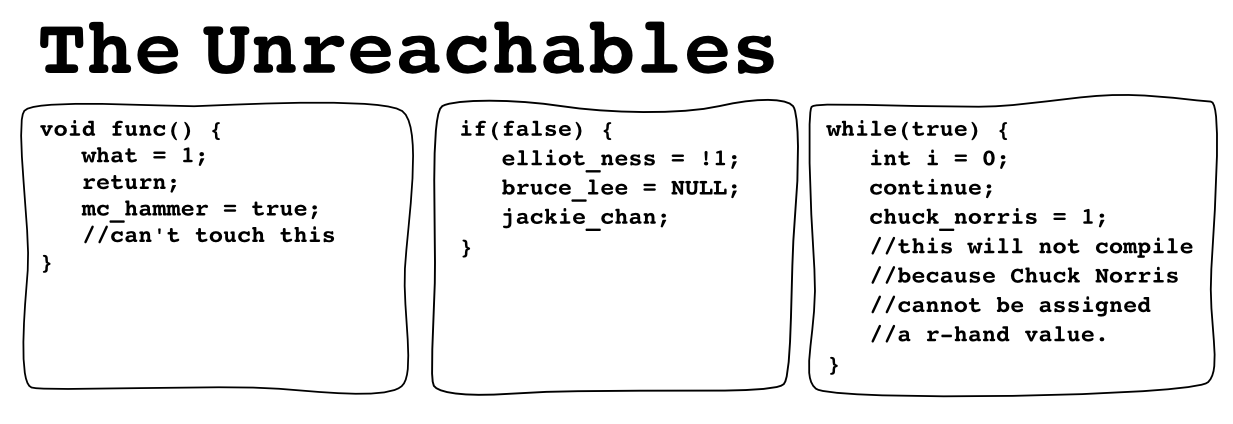

The Unreachables [comic]

Just wanted to share some code I’ve been writing.

So I wanted to create a food classifier, for a cool project down in the Media Lab called FoodCam. It’s basically a camera that people put free food under, and they can send an email alert to the entire building to come eat (by pushing a huge button marked “Dinner Bell”). Really a cool thing.

OK let’s get down to business.

Hi,

Wanted to report on some progress I made connecting the Samsung Galaxy S Vibrant smartphone to a 3M MPro110 pico (pocket) projector.

It is a fairly simple process, but I couldn’t really find any schematics of the video ports on either the phone (the 3.5mm headphones jack) or the projector (3.5mm composite video-in jack). So if anyone is trying to do this, they can find the wiring schematics here.

Hi,

I wanted to put up a quick note on how to use Kalman Filters in OpenCV 2.2 with the C++ API, because all I could find online was using the old C API. Plus the kalman.cpp example that ships with OpenCV is kind of crappy and really doesn’t explain how to use the Kalman Filter.

I’m no expert on Kalman filters, though; this is just a quick hack I got going as a test for a project. It worked, so I’m posting the results.
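To give a feel for the predict/correct cycle before diving into the OpenCV API, here is a minimal sketch in plain Python. It is not the OpenCV code from the post: `ScalarKalman` and `smooth` are hypothetical names, the state is a single number assumed to stay roughly constant, and the noise variances `q` and `r` are made-up defaults. OpenCV's `cv::KalmanFilter` exposes the same two steps as `predict()` and `correct()`, just with matrices instead of scalars.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """1-D Kalman filter for a roughly constant value (illustrative helper)."""
    x: float = 0.0    # current state estimate
    p: float = 1.0    # variance (uncertainty) of the estimate
    q: float = 1e-5   # process noise variance (assumed value)
    r: float = 0.1    # measurement noise variance (assumed value)

    def predict(self) -> float:
        # Constant model: the state carries over, uncertainty grows by q.
        self.p += self.q
        return self.x

    def correct(self, z: float) -> float:
        # Kalman gain: how much to trust the measurement over the prediction.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k
        return self.x


def smooth(measurements, **kwargs):
    """Run predict/correct over a sequence and return the estimates."""
    kf = ScalarKalman(**kwargs)
    estimates = []
    for z in measurements:
        kf.predict()
        estimates.append(kf.correct(z))
    return estimates


if __name__ == "__main__":
    # Feed a constant signal; the estimate should climb from 0 towards 1.
    print(smooth([1.0] * 10))
```

A larger `r` makes the filter trust measurements less and react more slowly; a larger `q` does the opposite.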

Hi,

Just sharing a snippet of code. As part of a project I’m doing, I need to analyse the links in the Wikipedia corpus. While using the API is one solution, it doesn’t retain the order in which links appear in the page. It also returns links that are not part of the main text, which makes the linkage DB very cluttered.

So, I set out to parse the raw MediaWiki format all Wikipedia articles are written in, to get only the relevant links and in order. I call them contextual because they live inside the text and have context.

Initially I used string matching and other complex string-scraping methods. It was a bust: there are too many edge cases to deal with. That is when I discovered PyParsing, the excellent parsing library. It did the job, and here are the results.
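To make "contextual links, in order" concrete, here is a rough sketch using only the standard library's `re` module. To be clear, this is not the PyParsing grammar from the post; it is the naive kind of approach, shown just to illustrate the goal. The names `WIKILINK`, `SKIP_PREFIXES` and `contextual_links` are my own for this example, and it assumes simple `[[Target]]` and `[[Target|label]]` links with nothing nested inside them.

```python
import re

# Matches [[Target]] or [[Target|label]] with no brackets nested inside.
WIKILINK = re.compile(r"\[\[([^\[\]|]+)(?:\|([^\[\]]*))?\]\]")

# Namespaces that are not part of the running text (assumed list, not exhaustive).
SKIP_PREFIXES = ("file:", "image:", "category:")


def contextual_links(wikitext):
    """Return (target, label) pairs in the order they appear in the text."""
    links = []
    for match in WIKILINK.finditer(wikitext):
        target = match.group(1).strip()
        if target.lower().startswith(SKIP_PREFIXES):
            continue
        # With no label (or an empty one), the target doubles as the label.
        label = (match.group(2) or target).strip()
        links.append((target, label))
    return links


if __name__ == "__main__":
    sample = (
        "'''Python''' is a [[programming language]] created by "
        "[[Guido van Rossum|Guido]]. [[Category:Languages]]"
    )
    for target, label in contextual_links(sample):
        print(target, "->", label)
```

This falls over on templates, links inside image captions, and other nested markup, which is the sort of thing that makes a real grammar the better tool.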

I’ve seen some examples of people who build motion parallax capable screens using Kinect, but as usual – they don’t share the code. Too bad.

Well this is your chance to see how it’s done, and it’s fairly simple as well.

I’m a fan of Last.fm online radio, and I have a habit of marking every good song that I hear as a “loved track”. Over the years I got quite a list, and so I decided to turn it into my jogging playlist. But for that, I need all the songs downloaded to my computer so I can put them on my mobile. While Last.fm does link to Amazon for downloading all the loved songs for pay, I’m going to walk the fine moral line here and suggest how you can download every song from existing free YouTube videos.

If it really bothers you, think of it as if I created a YouTube playlist and am now using my data plan to stream the songs off YT itself.

Moral issues resolved, we can move on to the scripting.

Update (4/27/12): youtube-dl.py has moved: https://github.com/rg3/youtube-dl/, and also added a very neat --extract-audio option so you can get the songs in audio right away (it basically does a conversion in a second step).