So I was contacted earlier by someone asking about the Head Pose Estimation work I put up a while back. And I remembered that I needed to go back to that work and fix some things, so it was a great opportunity.

I ended up making it a bit nicer, and it’s also a good chance for us to review some OpenCV-OpenGL interoperation stuff. Things like getting a projection matrix in OpenCV and translating it to an OpenGL ModelView matrix are very handy.

Let’s get down to the code.

Using PnP

Basically nothing has changed since last time: I use PnP to get the 6DOF pose of the head from point correspondences. I pick the correspondences out manually beforehand, marking the 2D positions of the Left Eye, Right Eye, Left Ear, Right Ear, Left Mouth, Right Mouth and Nose. Then I used a 3D model of a female head from TurboSquid to get 3D points for the same features, simply using MeshLab’s “Get Info” selector.

Solving a PnP (Perspective-n-Point) problem is useful when you have 2D-3D correspondences and want to recover the 3D object’s pose (orientation and position, 6DOF).
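For reference, this is the standard pinhole relation that PnP inverts: each 3D model point maps to its 2D image point through the intrinsics K and the unknown pose [R | t], and solvePnP looks for the R (3 DOF) and t (3 DOF) that best explain all correspondences at once. Distortion is ignored, as in this post, and K uses the same approximation as loadWithPoints: f is the larger image dimension and (c_x, c_y) is the image center.

```latex
s \begin{pmatrix} u \\ v \\ 1 \end{pmatrix}
  = K \,[\, R \mid t \,] \begin{pmatrix} X \\ Y \\ Z \\ 1 \end{pmatrix},
\qquad
K = \begin{pmatrix} f & 0 & c_x \\ 0 & f & c_y \\ 0 & 0 & 1 \end{pmatrix},
\qquad
f = \max(\text{width}, \text{height})
```

Here s is the projective depth of the point in front of the camera.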

I ended up with a set of 3D points

```cpp
modelPoints.push_back(Point3f(2.37427,110.322,21.7776));   // l eye (v 314)
modelPoints.push_back(Point3f(70.0602,109.898,20.8234));   // r eye (v 0)
modelPoints.push_back(Point3f(36.8301,78.3185,52.0345));   // nose (v 1879)
modelPoints.push_back(Point3f(14.8498,51.0115,30.2378));   // l mouth (v 1502)
modelPoints.push_back(Point3f(58.1825,51.0115,29.6224));   // r mouth (v 695)
modelPoints.push_back(Point3f(-61.8886,127.797,-89.4523)); // l ear (v 2011)
modelPoints.push_back(Point3f(127.603,126.9,-83.9129));    // r ear (v 1138)
```

And 2D points

```
102 108 144 114 116 136 104 152 132 153 96 100 198 106
```

for every image of Angelina that I had.
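A minimal sketch of reading one such line into 2D points. It assumes the 14 numbers are seven interleaved x y pairs listed in the same order as modelPoints; the post doesn't spell out the file format, so check that against the repo. Point2 and parseImagePoints are hypothetical names, and Point2 stands in for cv::Point2f so the sketch needs only the standard library.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical stand-in for cv::Point2f (no OpenCV dependency).
struct Point2 {
    float x, y;
};

// Parse "x0 y0 x1 y1 ..." into points.
// ASSUMPTION: values are interleaved x/y pairs in the same order as the 3D model points.
std::vector<Point2> parseImagePoints(const std::string& line) {
    std::istringstream in(line);
    std::vector<float> values;
    float v;
    while (in >> v) {
        values.push_back(v);
    }
    // Every point needs both an x and a y.
    assert(values.size() % 2 == 0);

    std::vector<Point2> pts;
    for (std::size_t i = 0; i + 1 < values.size(); i += 2) {
        pts.push_back({values[i], values[i + 1]});
    }
    return pts;
}
```

Under that assumption the line above yields seven points, the first at (102, 108) and the last at (198, 106).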

The next step is pretty obvious; we solve the PnP:

```cpp
// op (3D model points), rvec/tvec (solvePnP outputs), rot[9], tv[3] and camMatrix
// are declared elsewhere in the project; tv presumably holds the data behind tvec.
void loadWithPoints(Mat& ip, Mat& img) {
    // Approximate intrinsics: focal length = largest image dimension,
    // principal point at the image center.
    int max_d = MAX(img.rows, img.cols);
    camMatrix = (Mat_<double>(3,3) << max_d, 0,     img.cols/2.0,
                                      0,     max_d, img.rows/2.0,
                                      0,     0,     1.0);
    cout << "using cam matrix " << endl << camMatrix << endl;

    double _dc[] = {0,0,0,0}; // assume no lens distortion
    // (OpenCV 3 and later spell this flag cv::SOLVEPNP_EPNP)
    solvePnP(op, ip, camMatrix, Mat(1,4,CV_64FC1,_dc), rvec, tvec, false, CV_EPNP);

    // rvec is a Rodrigues rotation vector; expand it into rot[] (wrapped by rotM)
    Mat rotM(3,3,CV_64FC1,rot);
    Rodrigues(rvec, rotM);
    double* _r = rotM.ptr<double>();
    printf("rotation mat: \n %.3f %.3f %.3f\n%.3f %.3f %.3f\n%.3f %.3f %.3f\n",
           _r[0],_r[1],_r[2],_r[3],_r[4],_r[5],_r[6],_r[7],_r[8]);
    printf("trans vec: \n %.3f %.3f %.3f\n", tv[0], tv[1], tv[2]);

    // 3x4 extrinsic matrix [R|t]
    double _pm[12] = {_r[0],_r[1],_r[2],tv[0],
                      _r[3],_r[4],_r[5],tv[1],
                      _r[6],_r[7],_r[8],tv[2]};
    Matx34d P(_pm);
    Mat KP = camMatrix * Mat(P);
    // cout << "KP " << endl << KP << endl;

    //reproject object points - check validity of found projection matrix
    for (int i=0; i<op.rows; i++) {
        Mat_<double> X = (Mat_<double>(4,1) << op.at<float>(i,0), op.at<float>(i,1), op.at<float>(i,2), 1.0);
        // cout << "object point " << X << endl;
        Mat_<double> opt_p = KP * X;
        Point2f opt_p_img(opt_p(0)/opt_p(2), opt_p(1)/opt_p(2));
        // cout << "object point reproj " << opt_p_img << endl;
        circle(img, opt_p_img, 4, Scalar(0,0,255), 1);
    }

    rotM = rotM.t(); // transpose to conform with majorness of opengl matrix
}
```

solvePnP gives us a rotation vector (which Rodrigues expands into a rotation matrix) and a translation vector. Luckily we can use them directly in OpenGL to render, just like we do in Augmented Reality. Note that I’m transposing the rotation matrix because OpenGL is column-major, not row-major like OpenCV.
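To make the OpenCV-to-OpenGL step concrete, here is a dependency-free sketch of what happens between solvePnP and glMultMatrixd: the rotation vector is expanded with the Rodrigues formula (the conversion cv::Rodrigues performs), and R and t are then written into the 16-element column-major array OpenGL expects. The function and type names are hypothetical. In the post itself the translation is applied separately with glTranslated before multiplying by the rotation, which amounts to the same [R | t] matrix.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat3 = std::array<double, 9>;    // row-major 3x3, like OpenCV's Mat
using GLMat4 = std::array<double, 16>; // column-major 4x4, like OpenGL

// Rodrigues formula: rotation vector r (unit axis * angle) -> rotation matrix.
Mat3 rodrigues(const std::array<double, 3>& r) {
    const double theta = std::sqrt(r[0]*r[0] + r[1]*r[1] + r[2]*r[2]);
    assert(std::isfinite(theta)); // guard against NaN/inf input
    if (theta < 1e-12) {
        return {1, 0, 0, 0, 1, 0, 0, 0, 1}; // no rotation
    }
    const double kx = r[0] / theta, ky = r[1] / theta, kz = r[2] / theta;
    const double c = std::cos(theta), s = std::sin(theta), v = 1.0 - c;
    // R = c*I + (1-c)*k*k^T + s*[k]_x
    return {
        c + v*kx*kx,    v*kx*ky - s*kz, v*kx*kz + s*ky,
        v*ky*kx + s*kz, c + v*ky*ky,    v*ky*kz - s*kx,
        v*kz*kx - s*ky, v*kz*ky + s*kx, c + v*kz*kz
    };
}

// Pack [R | t] into an OpenGL column-major modelview matrix.
// Element (row i, col j) of the 4x4 goes to m[j*4 + i].
GLMat4 toGLModelView(const Mat3& R, const std::array<double, 3>& t) {
    GLMat4 m{};
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j) {
            m[j*4 + i] = R[i*3 + j]; // this index swap is the transpose
        }
        m[12 + i] = t[i]; // translation lives in the last column
    }
    m[15] = 1.0;
    return m;
}
```

For example, rodrigues({0, 0, pi/2}) is a 90° turn about z, and toGLModelView(R, {1, 2, 3}) places 1, 2, 3 at indices 12 to 14 of the GL array.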

I also added a small check that reprojects the 3D points back onto the image, just to visualize that the fit is almost never perfect.
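The reprojection check can also be turned into a single number. This is a minimal sketch in plain C++ rather than OpenCV. It assumes the same intrinsics as loadWithPoints: focal length max(width, height), principal point at the image center, and no distortion. The struct and function names are hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { double x, y, z; };
struct Pix { double u, v; };

// Pinhole intrinsics as approximated in the post.
struct Intrinsics {
    double f, cx, cy;
    Intrinsics(int width, int height)
        : f(std::max(width, height)), cx(width / 2.0), cy(height / 2.0) {}
};

// (x, y, z) = R*X + t, then u = f*x/z + cx, v = f*y/z + cy. R is row-major 3x3.
Pix project(const Intrinsics& K, const std::array<double, 9>& R,
            const Vec3& t, const Vec3& X) {
    const double x = R[0]*X.x + R[1]*X.y + R[2]*X.z + t.x;
    const double y = R[3]*X.x + R[4]*X.y + R[5]*X.z + t.y;
    const double z = R[6]*X.x + R[7]*X.y + R[8]*X.z + t.z;
    assert(z > 0); // point must be in front of the camera
    return {K.f * x / z + K.cx, K.f * y / z + K.cy};
}

// Mean Euclidean distance (pixels) between reprojected and observed points.
double meanReprojectionError(const Intrinsics& K, const std::array<double, 9>& R,
                             const Vec3& t, const std::vector<Vec3>& object,
                             const std::vector<Pix>& image) {
    assert(object.size() == image.size() && !object.empty());
    double sum = 0.0;
    for (std::size_t i = 0; i < object.size(); ++i) {
        const Pix p = project(K, R, t, object[i]);
        sum += std::hypot(p.u - image[i].u, p.v - image[i].v);
    }
    return sum / object.size();
}
```

Dividing by z here is the same as the opt_p(0)/opt_p(2) step in the loop above, since K*[R|t]*X has z as its third component.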

A word about OpenCV and OpenGL

I created a tiny reusable piece of code that goes with me whenever I mix OpenCV and OpenGL. Basically, all I have there are functions to load OpenCV Mats into OpenGL textures and draw them to the raster.

```cpp
void copyImgToTex(const Mat& _tex_img, GLuint* texID, double* _twr, double* _thr);

typedef struct my_texture {
    GLuint tex_id;
    double twr, thr, aspect_w2h;
    Mat image;
    bool initialized;

    // initialize every member, including the flag and aspect ratio
    my_texture() : tex_id(-1), twr(1.0), thr(1.0), aspect_w2h(1.0), initialized(false) {}

    void set(const Mat& ocvimg) {
        ocvimg.copyTo(image);
        copyImgToTex(image, &tex_id, &twr, &thr);
        aspect_w2h = (double)ocvimg.cols/(double)ocvimg.rows;
        initialized = true;
    }
} OpenCVGLTexture;

void glEnable2D();  // setup 2D drawing
void glDisable2D(); // end 2D drawing
OpenCVGLTexture MakeOpenCVGLTexture(const Mat& _tex_img); // create an OpenCV-OpenGL image
void drawOpenCVImageInGL(const OpenCVGLTexture& tex);     // draw an OpenCV-OpenGL image
```

Very basic stuff, just binding and uploading textures and drawing them in 2D to the screen.
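One detail any Mat-to-texture upload has to get right: OpenGL assumes by default that each row of pixel data starts on a 4-byte boundary (GL_UNPACK_ALIGNMENT is 4), while a continuous 3-channel 8-bit Mat row is 3 × width bytes, e.g. 903 bytes for a 301-pixel-wide image. A common fix is to choose the alignment from the Mat's row stride before calling glTexImage2D. The post doesn't show copyImgToTex, so this is only a sketch of the idea, not its actual code. unpackAlignmentFor is a hypothetical helper, and the sketch assumes a continuous Mat, since row padding would also need GL_UNPACK_ROW_LENGTH.

```cpp
#include <cassert>
#include <cstddef>

// Largest OpenGL-legal unpack alignment (8, 4, 2 or 1) that evenly divides the
// row stride in bytes. Intended use (with OpenGL/OpenCV, not compiled here):
//   glPixelStorei(GL_UNPACK_ALIGNMENT, unpackAlignmentFor(img.step));
int unpackAlignmentFor(std::size_t rowStrideBytes) {
    assert(rowStrideBytes > 0);
    const std::size_t candidates[] = {8, 4, 2};
    for (std::size_t a : candidates) {
        if (rowStrideBytes % a == 0) {
            return static_cast<int>(a);
        }
    }
    return 1; // odd stride: rows are byte-aligned only
}
```

A 300-pixel BGR row (900 bytes) gets alignment 4, while a 301-pixel row (903 bytes) needs 1.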

One more sorta useful thing is getting the pixels back from OpenGL after rendering:

```cpp
void saveOpenGLBuffer() {
    static unsigned int opengl_buffer_num = 0;

    int vPort[4]; glGetIntegerv(GL_VIEWPORT, vPort);
    Mat_<Vec3b> opengl_image(vPort[3], vPort[2]);
    {
        Mat_<Vec4b> opengl_image_4b(vPort[3], vPort[2]);
        glReadPixels(0, 0, vPort[2], vPort[3], GL_BGRA, GL_UNSIGNED_BYTE, opengl_image_4b.data);
        flip(opengl_image_4b, opengl_image_4b, 0); // OpenGL rows start at the bottom
        const int from_to[] = {0,0, 1,1, 2,2};    // copy B, G, R; drop alpha
        mixChannels(&opengl_image_4b, 1, &opengl_image, 1, from_to, 3);
    }

    stringstream ss; ss << "opengl_buffer_" << opengl_buffer_num++ << ".jpg";
    imwrite(ss.str(), opengl_image);
}
```

You can also use this just to grab the image without saving it to a file.
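The flip and mixChannels calls undo two mismatches: glReadPixels returns rows bottom-to-top, and the buffer is read as 4-channel BGRA while the saved image is 3-channel BGR. Here is the same reshuffle written out over raw bytes, to illustrate what those two calls do together; glBGRAToTopDownBGR is a hypothetical name. With OpenCV, cv::cvtColor with COLOR_BGRA2BGR (CV_BGRA2BGR in OpenCV 2.x) could stand in for the mixChannels call.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert a glReadPixels(GL_BGRA, GL_UNSIGNED_BYTE) buffer (bottom row first)
// into a tightly packed BGR buffer with the top row first, as OpenCV expects.
std::vector<std::uint8_t> glBGRAToTopDownBGR(const std::vector<std::uint8_t>& bgra,
                                             int width, int height) {
    assert(bgra.size() == static_cast<std::size_t>(width) * height * 4);
    std::vector<std::uint8_t> bgr(static_cast<std::size_t>(width) * height * 3);
    for (int row = 0; row < height; ++row) {
        const int srcRow = height - 1 - row; // vertical flip
        for (int col = 0; col < width; ++col) {
            const std::size_t src = (static_cast<std::size_t>(srcRow) * width + col) * 4;
            const std::size_t dst = (static_cast<std::size_t>(row) * width + col) * 3;
            // copy B, G, R and drop alpha (channel 3)
            bgr[dst + 0] = bgra[src + 0];
            bgr[dst + 1] = bgra[src + 1];
            bgr[dst + 2] = bgra[src + 2];
        }
    }
    return bgr;
}
```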

Visualizing the results

My display function is pretty straightforward, but I’ll show it here anyway:

```cpp
void display(void)
{
    // draw the image in the back
    int vPort[4]; glGetIntegerv(GL_VIEWPORT, vPort);
    glEnable2D();
    drawOpenCVImageInGL(imgTex);
    glTranslated(vPort[2]/2.0, 0, 0);
    drawOpenCVImageInGL(imgWithDrawing);
    glDisable2D();

    glClear(GL_DEPTH_BUFFER_BIT); // we want to draw stuff over the image

    // draw only on left part
    glViewport(0, 0, vPort[2]/2, vPort[3]);

    glPushMatrix();
    // camera at the origin looking down +z with y pointing down, like OpenCV's camera
    gluLookAt(0,0,0, 0,0,1, 0,-1,0);

    // put the object in the right position in space
    Vec3d tvv(tv[0], tv[1], tv[2]);
    glTranslated(tvv[0], tvv[1], tvv[2]);

    // rotate it (rot was transposed in loadWithPoints, so it reads as column-major R)
    double _d[16] = { rot[0],rot[1],rot[2],0,
                      rot[3],rot[4],rot[5],0,
                      rot[6],rot[7],rot[8],0,
                      0,     0,     0,     1};
    glMultMatrixd(_d);

    // draw the 3D head model
    glColor4f(1, 1, 1, 0.75);
    glmDraw(head_obj, GLM_SMOOTH);

    //----------Axes
    glScaled(50, 50, 50);
    drawAxes();
    //----------End axes

    glPopMatrix();

    // restore to looking at complete viewport
    glViewport(0, 0, vPort[2], vPort[3]);

    glutSwapBuffers();
}
```
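The gluLookAt(0,0,0, 0,0,1, 0,-1,0) call lines the OpenGL camera up with OpenCV's. OpenCV's camera looks down +z with y pointing down, while OpenGL's eye space looks down -z with y up. Building the rotation the way the gluLookAt reference page describes it (rows s, u and -f) shows that this call simply flips the y and z axes. The plain C++ sketch below uses hypothetical helper names; the eye sits at the origin, so there is no translation part.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using V3 = std::array<double, 3>;

V3 cross(const V3& a, const V3& b) {
    return {a[1]*b[2] - a[2]*b[1], a[2]*b[0] - a[0]*b[2], a[0]*b[1] - a[1]*b[0]};
}

V3 normalize(const V3& a) {
    const double n = std::sqrt(a[0]*a[0] + a[1]*a[1] + a[2]*a[2]);
    assert(n > 0);
    return {a[0] / n, a[1] / n, a[2] / n};
}

// Rotation part of gluLookAt with the eye at the origin: rows are s, u and -f.
std::array<V3, 3> lookAtRotation(const V3& center, const V3& up) {
    const V3 f = normalize(center);        // viewing direction
    const V3 s = normalize(cross(f, up));  // side vector
    const V3 u = cross(s, f);              // recomputed up vector
    std::array<V3, 3> M = {{s, u, {-f[0], -f[1], -f[2]}}};
    return M;
}

// Map a point from world space into eye space with that rotation.
V3 transformPoint(const std::array<V3, 3>& M, const V3& p) {
    V3 r{};
    for (int i = 0; i < 3; ++i) {
        r[i] = M[i][0]*p[0] + M[i][1]*p[1] + M[i][2]*p[2];
    }
    return r;
}
```

With center (0, 0, 1) and up (0, -1, 0), a point (x, y, z) in OpenCV's camera frame lands at (x, -y, -z) in OpenGL eye space, which is why rot and tv from solvePnP can be used as-is after this call.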

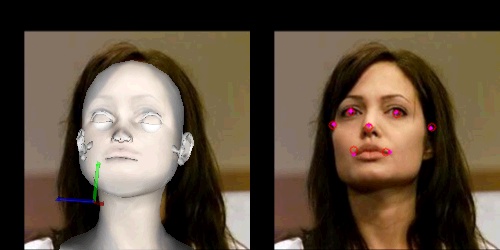

I first draw the OpenCV images on the raster, then add in the 3D head model.

Note that I’m using the exact results I got from solvePnP: the variables rot and tv.
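For the rendered head to line up with the photo, the OpenGL projection also has to agree with the camMatrix from loadWithPoints, and that setup isn't shown here. Assuming it is done with gluPerspective (an assumption; check the repo), the vertical field of view follows from the focal length f and the image height h as fovy = 2·atan(h / 2f), with the image aspect ratio as the aspect argument. This holds when the principal point is at the image center, as it is in this post. fovyDegrees is a hypothetical helper.

```cpp
#include <cassert>
#include <cmath>

// gluPerspective-style vertical field of view, in degrees, for a pinhole camera
// with focal length f and image height h (both in pixels), principal point centered.
double fovyDegrees(double f, double h) {
    assert(f > 0 && h > 0);
    const double pi = std::acos(-1.0);
    return 2.0 * std::atan(h / (2.0 * f)) * 180.0 / pi;
}
```

With the post's f = max(width, height), a square 480×480 image gives 2·atan(0.5), roughly 53.13°.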

For some strange reason, I’m getting some wonky faces on the 3D model… I tried using MeshLab to fix all the faces, vertices, normals, etc. but to no avail. Can you tell what the problem is?

Results

Here’s a montage of all the results:

Code and Salutations

Code is up at the GitHub: https://github.com/royshil/HeadPosePnP

Thanks for tuning in!

Roy.

17 replies on “Head Pose Estimation with OpenCV & OpenGL Revisited [w/ code]”

Hi there,

I’m into POSIT now and I still don’t know what to put in the 2D and 3D vectors. I have a 3D image from which I can get the XYZ values for a given point. As far as I understand, the 2D vector should hold the XY and the 3D vector the XYZ. Right? Thanks.

Hi man, can u give me an example of mixing OpenGL and OpenGL ES in iOS? Thank you

Hi man, when I compile your code, everything is OK except that the solvePnP function fails. Could you do me a favor? Thank you. I’m looking forward to your reply.

Hi, there!

Can I know which version of OpenCV you use?

Because I’ve got a bunch of errors using OpenCV 2.1.

I’m looking forward to your reply.

Hi man, I also want to ask the same question: why is solvePnP broken?

Hi Roy,

I just had a doubt about the coordinate axes in green, blue and red. What frame of reference do they belong to? Is that the camera’s frame of reference or the world’s? I am sorry if it is a silly doubt, but please clear it up if possible.

Thanks

Hi Roy, thanks for sharing your code. I could compile it, but solvePnP gives an error when running.

```
OpenCV Error: Assertion failed (dims <= 2 && data && (unsigned)i0 < (unsigned)size.p[0] && (unsigned)(i1*DataType<_Tp>::channels) < (unsigned)(size.p[1]*channels()) && ((((sizeof(size_t)<<28)|0x8442211) >> ((DataType<_Tp>::depth) & ((1 << 3) - 1))*4) & 15) == elemSize1()) in unknown function, file C:\slave\WinInstallerMegaPack\src\opencv\modules\core\include\opencv2/core/mat.hpp, line 542
```

It seems some data dimensions are not correct; I used your data as instructed.

Do you have any idea? Thank you very much.

Hi everybody, here is a fix for the program. Instead of the call

solvePnP(op,ip,camMatrix,Mat(1,4,CV_64FC1,_dc),rvec,tvec,false,CV_EPNP);

use the call

solvePnP(op,ip,camMatrix,Mat(1,4,CV_64FC1,_dc),rvec,tvec,true);

Great tutorial!!

I am working on the same problem of head pose estimation, but I want to take it to the Android platform. Can you tell me how I can work this problem out on Android?

Hello Roy,

I tried running your program, but the CV_EPNP flag does not work with the solvePnP function. Do you know why that might be the case? I replaced it with CV_ITERATIVE, and it works. I’m not sure what the difference is and whether or not one is better than the other; any insight from you would be useful.

Thanks,

Harshi

Hi, this is really cool (albeit creepy; that doll head freaks me out). I tried running the code and it seems to freeze after the first test image. It displays the direction vector, superimposes the model head on the subject, and marks fiducials on the original image, but it never continues on to the next image. Am I running the code wrong? I just did ./SHPE.

Hello Roy,

Your program works perfectly. I have a question: how can I pick the 2D points automatically? I know your .txt file has the 2D points, but I want to pick them from video, like a still frame from a camera. Do you have an idea, or something else?

@Charlie

To get the landmarks you can use some kind of classifier with the features trained to find a landmark: ear, eye, tip of nose, corner of mouth, etc.

The classifiers probably won’t do a good job for a single point, so you’d need to combine everything to find the best hypothesis. You can choose the classified features that, for example, are arranged in a way that makes sense for a forward-facing human face (i.e. the mouth is below the eyes, etc.).
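The suggestion above, keeping only landmark hypotheses arranged like an upright, forward-facing face, can be sketched as a simple geometric filter. The rules, thresholds-free comparisons and names below are illustrative assumptions, not code from the repo. Coordinates are image pixels with y growing downward, and "left" means the landmark on the image's left.

```cpp
#include <cassert>
#include <vector>

struct Pt { double x, y; }; // image coordinates, y pointing down

// Hypothetical candidate set: one detection per landmark.
struct FaceHypothesis {
    Pt leftEye, rightEye, nose, leftMouth, rightMouth;
};

// Coarse plausibility rules for an upright, roughly frontal face.
bool isPlausibleFace(const FaceHypothesis& h) {
    const double eyeY = (h.leftEye.y + h.rightEye.y) / 2.0;
    const double mouthY = (h.leftMouth.y + h.rightMouth.y) / 2.0;
    return h.leftEye.x < h.rightEye.x                        // eyes in left-to-right order
        && h.leftMouth.x < h.rightMouth.x                    // mouth corners likewise
        && h.nose.y > eyeY && h.nose.y < mouthY              // nose below eyes, above mouth
        && h.nose.x > h.leftEye.x && h.nose.x < h.rightEye.x; // nose between the eyes
}

// Keep only the hypotheses that pass the rules.
std::vector<FaceHypothesis> filterHypotheses(const std::vector<FaceHypothesis>& all) {
    std::vector<FaceHypothesis> kept;
    for (const FaceHypothesis& h : all) {
        if (isPlausibleFace(h)) {
            kept.push_back(h);
        }
    }
    return kept;
}
```

In practice you would score the surviving hypotheses (e.g. by classifier confidence) and keep the best one, rather than treating these rules as a final answer.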

Hello Roy,

I tried running your program, and I got a result like the picture. The colors of the picture are weird, and the model isn’t displayed. Why?

the result

Hi Roy! Great tutorial. Is there any chance you could share the approach to making the pose estimation real-time using a webcam?